Powering ethical and safe AI with API simulation

Building trust, ensuring transparency and facilitating regulation

Agentic AI brings with it incredible potential, but also significant societal questions, particularly around trust, transparency, and regulation. As AI systems gain autonomy and agency, the "black box" concern – the inability to understand how an AI makes decisions – becomes more pressing. Simultaneously, governments globally are enacting legislation, most notably the EU AI Act, to ensure safe AI development and deployment.

In this evolving landscape, how can organisations not only innovate with AI but also build systems that are inherently trustworthy and demonstrably compliant? The answer, surprisingly, often circles back to the foundational practice of API simulation. This builds directly upon our commitment to Compliant and Secure Application Testing, extending its principles to the specific challenges of the AI era.

The new imperative: compliant and trustworthy AI

The era of Agentic AI isn't just about technological advancement; it's about responsible innovation. Regulators are stepping in to ensure AI doesn't harm individuals or society. Key themes emerging from regulations like the EU AI Act include:

- Transparency: Users and stakeholders need to understand the logic, capabilities, and limitations of AI systems

- Accountability: Clear responsibility for AI system outcomes must be established

- Safety: AI systems must be designed, developed, and used in a way that minimises risk of harm

- Robustness: AI systems should be resilient to errors, faults, and inconsistencies

- Human oversight: Where appropriate, humans should retain the ability to intervene and override AI decisions

For AI agents that predominantly interact with the world via APIs, demonstrating compliance and building trust fundamentally relies on understanding and controlling these interactions.

How API simulation becomes your AI compliance catalyst

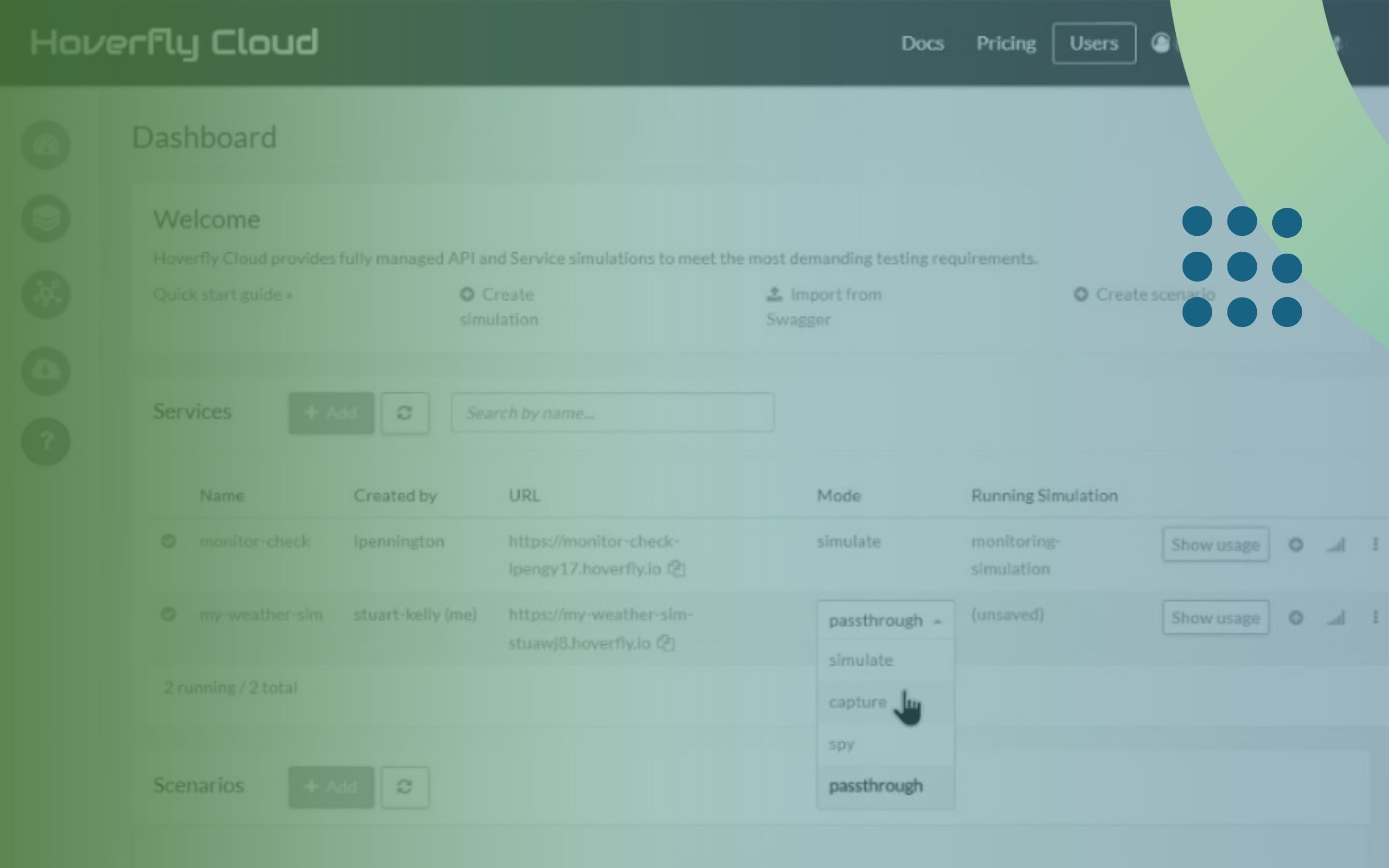

API simulation, particularly with a sophisticated platform like Hoverfly Cloud, directly addresses these regulatory and trust imperatives by enabling:

- Demonstrable safety and robustness (EU AI Act - Article 15)

For "high-risk" AI systems, the EU AI Act requires appropriate levels of accuracy, robustness, and cybersecurity, which in practice calls for rigorous testing and validation. API simulation is a direct enabler:

- Comprehensive stress testing: Simulate extreme load conditions or a wide range of unexpected API responses (for example, with Hoverfly Cloud's Simulate Mode or Spy Mode) to verify that your AI agent remains stable, performs reliably, and doesn't crash or behave unpredictably under duress

- Failure mode analysis: By precisely simulating API errors, network latency, or corrupted data using Simulate Mode, you can demonstrate that your AI agents handle failures gracefully, fall back to safe behaviour, and do not trigger cascading system failures. This speaks directly to the "resilience" requirement

- Controlled security testing: Simulate malicious API inputs (injection attempts, unauthorised access attempts) using Simulate Mode to verify your AI agent's resilience against cyber threats, a crucial aspect of overall system security
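
The failure mode analysis described above can be sketched in a few lines of Python. This is a minimal, in-process illustration rather than Hoverfly Cloud's API: `SimulatedResponse`, `scripted_transport`, and `fetch_price` are hypothetical names, and the "agent" is reduced to a single API-calling step with a retry and a fallback.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SimulatedResponse:
    status: int
    body: str
    latency_s: float = 0.0  # simulated latency; the sketch never actually sleeps


# A transport is any callable that maps a request path to a response.
Transport = Callable[[str], SimulatedResponse]


def scripted_transport(script: List[SimulatedResponse]) -> Transport:
    """Replay a non-empty script of responses in order (hypothetical helper)."""
    remaining = list(script)

    def send(path: str) -> SimulatedResponse:
        # Once only one response is left, keep repeating it.
        return remaining.pop(0) if len(remaining) > 1 else remaining[0]

    return send


def fetch_price(send: Transport, path: str, retries: int = 2,
                timeout_s: float = 1.0) -> Dict[str, object]:
    """Hypothetical agent step: fetch a price, retrying and then falling back."""
    for attempt in range(retries + 1):
        response = send(path)
        if response.latency_s > timeout_s:
            continue  # treat a slow response as a timeout
        if response.status != 200:
            continue  # treat a non-200 status as a transient failure
        try:
            payload = json.loads(response.body)
            return {"price": float(payload["price"]),
                    "source": "api", "attempts": attempt + 1}
        except (ValueError, KeyError, TypeError):
            continue  # corrupted or unexpected body
    # Every attempt failed: return a safe fallback instead of raising.
    return {"price": None, "source": "fallback", "attempts": retries + 1}
```

Scripting a `503`, then a corrupted body, then a valid response lets a test assert that the agent step recovers on the third attempt, while a script containing only errors checks that the fallback path is taken rather than an unhandled exception.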

- Enhanced transparency and explainability (EU AI Act - Article 13)

While AI models themselves can be complex, their interaction points with the outside world (APIs) can be made transparent through simulation:

- Auditable interaction logs: Every simulated API call made by your AI agent, along with the precise simulated response it received (especially when using Capture Mode or Simulate Mode), becomes part of an auditable trail. This allows developers, QA teams, and even auditors to reconstruct and understand exactly how the AI agent interacted with its external environment during testing

- Predictable behaviour validation: By defining clear simulation rules in Simulate Mode, you ensure the API environment for testing is predictable. If an AI agent behaves unexpectedly, the controlled simulated environment helps pinpoint whether the issue lies in the AI's logic or in an assumption about the API. This moves from a "black box" to a "controlled box" for external interactions

- Scenario-based explanation: For a specific AI decision, you can show exactly which API calls were made, what simulated responses were received (from Capture Mode or Simulate Mode), and how those responses influenced the AI's subsequent actions, aiding in post-hoc explanation of AI behaviour
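
To make the idea of an auditable trail concrete, here is a minimal Python sketch. It does not use Hoverfly Cloud's own logging or export features: `AuditedSimulation` and `simulated_inventory` are hypothetical names, and the simulated endpoint is a plain function standing in for a simulation rule.

```python
import copy
import hashlib
import json
from typing import Callable, Dict, List

# A handler maps (method, path) to a simulated response.
Handler = Callable[[str, str], Dict[str, object]]


class AuditedSimulation:
    """Wrap a simulated API handler and record every request/response pair."""

    def __init__(self, handler: Handler) -> None:
        self._handler = handler
        self.records: List[Dict[str, object]] = []

    def call(self, method: str, path: str) -> Dict[str, object]:
        response = self._handler(method, path)
        self.records.append({
            "seq": len(self.records) + 1,  # order in which the agent made calls
            "request": {"method": method, "path": path},
            "response": copy.deepcopy(response),  # snapshot, immune to later edits
        })
        return response

    def export(self) -> str:
        """Serialise the trail as JSON Lines, one interaction per line."""
        return "\n".join(json.dumps(r, sort_keys=True) for r in self.records)

    def digest(self) -> str:
        """SHA-256 of the exported trail, so later changes are detectable."""
        return hashlib.sha256(self.export().encode("utf-8")).hexdigest()


def simulated_inventory(method: str, path: str) -> Dict[str, object]:
    """Hypothetical simulated endpoint returning fixed, synthetic responses."""
    if method == "GET" and path == "/stock/widget":
        return {"status": 200, "body": {"sku": "widget", "in_stock": 0}}
    return {"status": 404, "body": {"error": "not found"}}
```

Routing the agent's calls through `AuditedSimulation.call` yields an ordered record that can be exported alongside test results. Storing the digest with the report is one simple way to show the trail was not altered afterwards.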

- Data privacy and security by design (GDPR, CCPA, PCI DSS, EU AI Act)

The foundational benefit of API simulation, as highlighted in our first article, is the elimination of real sensitive data from non-production environments. This is paramount for compliance:

- No real data exposure: By using synthetic data within simulated API responses in Simulate Mode, you keep real personal and payment data out of development and testing. This supports compliance with strict data handling regulations (GDPR, CCPA, PCI DSS, etc.) and significantly reduces the risk of costly breaches and non-compliance penalties

- Reduced compliance overhead: Demonstrating to auditors that sensitive data is never used in test environments becomes straightforward and undeniable. This streamlines auditing processes and reduces the burden of complex data masking or anonymisation
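
As a rough illustration of synthetic data in simulated responses, the sketch below generates deterministic, obviously fake customer records that could be used as response bodies. The field names and helper functions are hypothetical and not part of Hoverfly Cloud. The only external fact relied on is that `example.com` is a domain reserved for documentation and testing.

```python
import random
from typing import Dict, List


def synthetic_customer(rng: random.Random, customer_id: int) -> Dict[str, str]:
    """Build one fake customer record; no value is derived from real data."""
    first = rng.choice(["Alex", "Sam", "Jo", "Kit"])
    last = rng.choice(["Example", "Sample", "Testcase"])
    return {
        "id": f"cust-{customer_id:04d}",
        "name": f"{first} {last}",
        # example.com is reserved, so these addresses can never reach a real inbox.
        "email": f"{first.lower()}.{last.lower()}{customer_id}@example.com",
        "card_last4": f"{rng.randrange(10000):04d}",  # random digits, not a real card
    }


def synthetic_customers(count: int, seed: int = 42) -> List[Dict[str, str]]:
    """Generate a repeatable batch: the same seed always yields the same records."""
    rng = random.Random(seed)
    return [synthetic_customer(rng, i) for i in range(1, count + 1)]


def uses_only_reserved_domain(records: List[Dict[str, str]]) -> bool:
    """Simple guard a test suite could run before loading data into a simulation."""
    return all(r["email"].endswith("@example.com") for r in records)
```

Seeding the generator keeps test runs reproducible, and a guard like `uses_only_reserved_domain` gives auditors a concrete, automated check that test fixtures contain no production addresses.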

- Accelerating responsible innovation

Compliance and trust shouldn't stifle innovation; they should guide it. API simulation accelerates responsible AI development:

- Shift-left for safety: Developers can integrate security and compliance considerations into the earliest stages of AI agent development by using simulated APIs via Capture Mode and Simulate Mode, catching potential issues long before they become expensive problems in production

- Faster iteration cycles: Without dependencies on external systems or lengthy compliance reviews for test data, AI teams can iterate on their models and interaction logic at unprecedented speeds, while maintaining a high standard of safety

Hoverfly Cloud is your partner in compliant AI development

Hoverfly Cloud is uniquely positioned to empower organisations in building compliant and trustworthy AI systems. Our focus on precise, controlled, and scalable API simulation provides the essential toolkit to:

- Prove the robustness and safety of your AI agents using Capture Mode, Simulate Mode, and Spy Mode.

- Create auditable records of AI-API interactions for transparency.

- Ensure absolute data safety throughout the development lifecycle.

- Accelerate your journey towards ethical and responsible AI deployment.

As AI agents become increasingly integral to business operations, the focus on their safety, transparency, and adherence to regulatory frameworks will only intensify. By embracing advanced API simulation with Hoverfly Cloud, you're not just testing applications; you're actively building the foundations of a trusted and compliant AI future.

Build the next generation of AI with confidence and compliance. Contact us today for a personalised demonstration of how Hoverfly Cloud can secure and accelerate your AI development initiatives.
